WSO2 Carbon products come with built-in web-SSO authenticators. Within minutes, you can enable web-SSO for any WSO2 Carbon server using WSO2 Identity Server as the IDP. In this blog post, we use OpenSSO/OpenAM as the IDP instead and walk through its configuration.

Pre-requisites

1. Download and install OpenAM/OpenSSO [download the WAR file from here].

2. Download the WSO2 product.

Setting up the environment

Configuring OpenSSO/OpenAM

OpenSSO provides two mechanisms to register a service provider:

- Creating an SP fedlet

- Setting up an SP using a metadata file called sp.xml

In this post I'm using the latter approach.

1. Configure the sp.xml file.

- The given sp.xml sample file uses https://localhost:9443/acs as the redirection (assertion consumer service) URL. Change it to match your environment, i.e. replace localhost:9443 with your Carbon server's host and port.

- The entityID attribute in sp.xml must match the value of 'ServiceProviderID' in the authenticators.xml file.

- NameID format used in the sample: urn:oasis:names:tc:SAML:2.0:nameid-format:transient

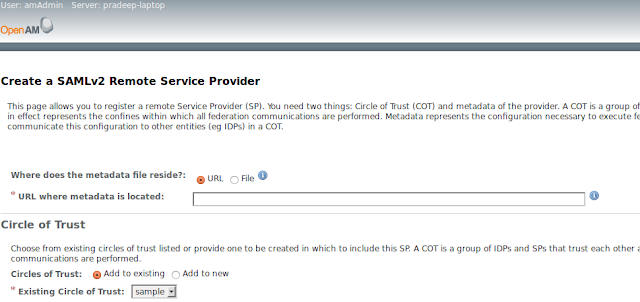

2. Go to Common Tasks > Register Remote Service Provider, select sp.xml as the file to upload, and select a Circle of Trust.

3. Go to Federation > entity providers in the OpenSSO management console and select the newly registered service provider. Select (tick) the response signing attribute. Under the name ID format list, make sure you specify the 'transient' and 'unspecified' name ID formats.
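If you don't have the sample sp.xml file at hand, a minimal one can be written by hand. The sketch below follows the standard SAML 2.0 metadata layout (EntityDescriptor, SPSSODescriptor, NameIDFormat, AssertionConsumerService) and lists the 'transient' and 'unspecified' NameID formats. The variable names SP_ENTITY_ID and ACS_URL are hypothetical, and both values (https://localhost:9443/acs) are assumptions to replace with your own; whatever you choose for the entityID must equal the ServiceProviderID in authenticators.xml. Optional elements such as KeyDescriptor are left out.

```shell
#!/bin/sh
# Sketch: write a minimal SAML 2.0 SP metadata file (sp.xml) for the Carbon server.
# Assumption: entityID and ACS URL are placeholders; adjust host/port to your setup.
# The entityID must equal ServiceProviderID in authenticators.xml.
SP_ENTITY_ID="https://localhost:9443/acs"
ACS_URL="https://localhost:9443/acs"

cat > sp.xml <<EOF
<EntityDescriptor xmlns="urn:oasis:names:tc:SAML:2.0:metadata"
                  entityID="${SP_ENTITY_ID}">
  <SPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <!-- NameID formats this SP accepts -->
    <NameIDFormat>urn:oasis:names:tc:SAML:2.0:nameid-format:transient</NameIDFormat>
    <NameIDFormat>urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified</NameIDFormat>
    <!-- Where OpenSSO POSTs the SAML response -->
    <AssertionConsumerService index="0" isDefault="true"
        Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST"
        Location="${ACS_URL}"/>
  </SPSSODescriptor>
</EntityDescriptor>
EOF
```

The resulting sp.xml is the file you upload in the Register Remote Service Provider step.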

Setting up WSO2 Carbon Server

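1. Enable the SSO authenticator and configure the IDP URL in authenticators.xml, found at $CARBON_HOME/repository/conf/security/authenticators.xml:

<Authenticator name="SAML2SSOAuthenticator" disabled="false">
    <Priority>10</Priority>
    <Config>
        <Parameter name="LoginPage">/carbon/admin/login.jsp</Parameter>
        <Parameter name="ServiceProviderID">https://localhost:9443/acs</Parameter>
        <Parameter name="IdentityProviderSSOServiceURL">http://localhost:8080/opensso/SSOPOST/metaAlias/idp</Parameter>
        <Parameter name="idpCertAlias">opensso</Parameter>
    </Config>
</Authenticator>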

Change the following params accordingly:

- ServiceProviderID - This can be any identifier; it doesn't have to be a URL. However, it must equal the entityID value configured in sp.xml.

- IdentityProviderSSOServiceURL - The SSO service URL of your IDP.

- idpCertAlias - The alias of the certificate used to validate responses from the IDP. The OpenSSO server's public key should be imported into the Carbon server's keystore under the alias 'opensso'.
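Because a mismatch between the sp.xml entityID and the ServiceProviderID is an easy mistake to make, here is a small sketch that compares the two. The helper name check_sp_id is hypothetical (it is not part of WSO2 or OpenSSO). It uses sed rather than an XML parser, so it assumes each value sits on one line and that the parameter is written as <Parameter name="ServiceProviderID">...</Parameter>.

```shell
#!/bin/sh
# Hypothetical helper: check that sp.xml's entityID equals the ServiceProviderID
# parameter in authenticators.xml. Uses sed, not an XML parser, so it assumes
# each value sits on a single line in the usual layout.
check_sp_id() {
  sp_xml="$1"
  auth_xml="$2"

  # Value of the first entityID="..." attribute in the SP metadata
  entity_id=$(sed -n 's/.*entityID="\([^"]*\)".*/\1/p' "$sp_xml" | head -n 1)

  # Text of <Parameter name="ServiceProviderID">...</Parameter>
  sp_id=$(sed -n 's/.*<Parameter name="ServiceProviderID">\([^<]*\)<\/Parameter>.*/\1/p' "$auth_xml" | head -n 1)

  if [ -n "$entity_id" ] && [ "$entity_id" = "$sp_id" ]; then
    echo "OK: entityID matches ServiceProviderID ($entity_id)"
  else
    echo "MISMATCH: entityID='$entity_id' ServiceProviderID='$sp_id'" >&2
    return 1
  fi
}
```

Usage: check_sp_id sp.xml "$CARBON_HOME/repository/conf/security/authenticators.xml". A non-zero return means the two identifiers differ (or one could not be found).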

Exporting/Importing Certificates

Add the public key of the selected circle of trust into the Carbon keystore (wso2carbon.jks) found under $CARBON_HOME/repository/resources/security/wso2carbon.jks. You can use the Java keytool to do that.

- Exporting a public key

Here we use the default certificate shipped in the OpenSSO keystore. It has the alias 'test' and is typically located in /home/opensso/opensso/keystore.jks. The default password is 'changeit'. To export the public key of 'test':

keytool -export -keystore keystore.jks -alias test -file test.cer

The public key will be stored in the 'test.cer' file. You can view the certificate content with the command:

keytool -printcert -file test.cer

- Importing a public key into wso2carbon.jks

Now import 'test.cer' into the Carbon keystore found under $CARBON_HOME/repository/resources/security/wso2carbon.jks:

keytool -import -alias opensso -file test.cer -keystore wso2carbon.jks

View the imported certificate using the command:

keytool -list -alias opensso -keystore wso2carbon.jks -storepass wso2carbon
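The export and import steps above can be combined into one non-interactive sketch. The function name import_opensso_cert and the variables OPENSSO_KS, OPENSSO_PASS, CARBON_KS and CARBON_PASS are hypothetical; their defaults are the paths, alias and passwords mentioned in this post, so override them if your installation differs. It uses -exportcert and -importcert (the current names of keytool's -export and -import) and -noprompt, so keytool does not stop to ask whether to trust the certificate.

```shell
#!/bin/sh
# Sketch: export OpenSSO's signing certificate and import it into the Carbon
# keystore without interactive prompts. Defaults are assumptions taken from this
# post; override them via these (hypothetical) environment variables.
OPENSSO_KS="${OPENSSO_KS:-/home/opensso/opensso/keystore.jks}"
OPENSSO_PASS="${OPENSSO_PASS:-changeit}"
CARBON_KS="${CARBON_KS:-$CARBON_HOME/repository/resources/security/wso2carbon.jks}"
CARBON_PASS="${CARBON_PASS:-wso2carbon}"

import_opensso_cert() {
  # 1. Export the public certificate stored under alias 'test' to test.cer
  keytool -exportcert -alias test -keystore "$OPENSSO_KS" \
    -storepass "$OPENSSO_PASS" -file test.cer || return 1

  # 2. Import it as a trusted certificate under alias 'opensso' (idpCertAlias)
  keytool -importcert -noprompt -alias opensso -file test.cer \
    -keystore "$CARBON_KS" -storepass "$CARBON_PASS" || return 1

  # 3. List the new entry to confirm the import
  keytool -list -alias opensso -keystore "$CARBON_KS" -storepass "$CARBON_PASS"
}
```

Run import_opensso_cert once; a second run fails at step 2 because the 'opensso' alias already exists in the Carbon keystore.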

Testing the Environment

Try accessing the Carbon management console (e.g. https://localhost:9443/carbon). The request will redirect you to the IDP (the OpenSSO login page). Enter the username and password on the OpenSSO login page. Once you are authenticated, you will be redirected back to the WSO2 Carbon product as a logged-in user.

Please note: The authenticated user has to exist in the Carbon server's user store for authorization (permission) purposes. Since the test environment described above does not share a user store between the IDP (OpenSSO server) and the SP (Carbon server), I created a user called 'amAdmin' in the Carbon server's user store. Otherwise, there will be an authorization failure during server login:

[2013-06-17 10:22:04,601] ERROR {org.wso2.carbon.identity.authenticator.saml2.sso.SAML2SSOAuthenticator} - Authentication Request is rejected. Authorization Failure.
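To check whether you are hitting this failure, you can search the Carbon log for it. A minimal sketch: sso_authz_failures is a hypothetical helper, and $CARBON_HOME/repository/logs/wso2carbon.log is assumed to be the default log location (pass another path as the first argument if yours differs).

```shell
#!/bin/sh
# Hypothetical helper: print SAML2 SSO authorization failures from the Carbon log.
# Assumption: default log location unless a path is passed as $1.
sso_authz_failures() {
  log="${1:-$CARBON_HOME/repository/logs/wso2carbon.log}"
  # Keep only lines from the SAML2 SSO authenticator that report this failure
  grep -F "SAML2SSOAuthenticator" "$log" | grep -F "Authorization Failure"
}
```

If it prints lines after an SSO login attempt, the user authenticated by OpenSSO most likely does not exist in the Carbon user store, as described above.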

Please note: As of this writing, there are interop issues between the released versions of Carbon servers and OpenSSO. I have created a JIRA here, along with a patch to rectify the issue. Future Carbon releases will fix this issue.
